Hook into Wikipedia using Java and the MediaWiki API

The MediaWiki API makes it possible for web developers to access, search, and integrate all Wikipedia content into their applications.

Given that Wikipedia is the ultimate online encyclopedia, there are dozens of use cases in which this might be useful.

I used to post a lot of articles on this blog about using the web service APIs of third-party sites. This is going to be another post like that.

This post describes how to use the Java Wikipedia API to fetch and format the contents of a Wikipedia article.

The Wikipedia API

The Wikipedia API makes it possible to interact with Wikipedia/MediaWiki through a web service instead of the usual browser-based web interface.

The documentation for the English Wikipedia is at http://en.wikipedia.org/w/api.php. The MediaWiki API can be called on all Wikimedia sites, so not only on Wikipedia itself, but also on Wikimedia Commons and others.

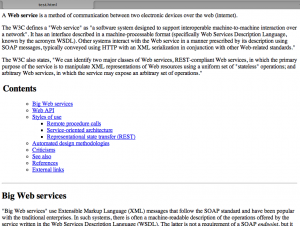

We cover a basic use case: getting the contents of the “Web service” article.

To fetch the contents for this article, the following url suffices:

http://en.wikipedia.org/w/api.php?format=xml&action=query&titles=Web%20service&prop=revisions&rvprop=content

A request to this URL returns an XML document that includes the current wiki markup of the page titled “Web service”. As the request parameters indicate, these requests are highly configurable. For example, formats other than XML, such as JSON, are possible. For a full list of available parameters, visit http://en.wikipedia.org/w/api.php.

We are not going to construct these URLs ourselves. Instead, we are going to use bliki, the Java Wikipedia API library.
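For comparison, here is a minimal sketch of what building and sending that request by hand could look like with only the JDK (Java 11 or later). The class and method names are hypothetical and not part of bliki. The sketch assumes the `https` endpoint (the URL above uses `http`) and sets a descriptive `User-Agent`, which Wikimedia asks API clients to send; the agent string shown is a placeholder.

```java
import java.io.IOException;
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

// Hypothetical helper, not part of bliki: builds and sends the raw API query.
class WikiApiUrl {

    // Builds the same query as the URL above for any endpoint and title.
    static String queryContentUrl(String endpoint, String title) {
        // URLEncoder uses form encoding ("+" for spaces); switch to %20.
        String encodedTitle = URLEncoder.encode(title, StandardCharsets.UTF_8)
                .replace("+", "%20");
        return endpoint + "?format=xml&action=query&titles=" + encodedTitle
                + "&prop=revisions&rvprop=content";
    }

    // Sends the request and returns the raw XML response body.
    static String fetch(String url) throws IOException, InterruptedException {
        HttpRequest request = HttpRequest.newBuilder(URI.create(url))
                .header("User-Agent", "WikiApiExample/0.1 (placeholder contact)")
                .GET()
                .build();
        return HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString())
                .body();
    }

    public static void main(String[] args) throws Exception {
        String url = queryContentUrl("https://en.wikipedia.org/w/api.php", "Web service");
        System.out.println(url);
        // Uncomment to actually perform the network request:
        // System.out.println(fetch(url));
    }
}
```

Titles with special characters are percent-encoded too, so "St. Mary's" becomes `St.%20Mary%27s`.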

Getting the Java Wikipedia API lib

If you are using Maven, you need to add the following repository to your pom:

<repository>

<id>info-bliki-repository</id>

<url>http://gwtwiki.googlecode.com/svn/maven-repository/</url>

<releases>

<enabled>true</enabled>

</releases>

</repository>

together with the following dependency:

<!-- bliki --> <dependency> <groupId>info.bliki.wiki</groupId> <artifactId>bliki-core</artifactId> <version>3.0.17</version> </dependency>

and if you want the addons:

<dependency> <groupId>info.bliki.wiki</groupId> <artifactId>bliki-addons</artifactId> <version>3.0.17</version> </dependency>

If you are not using Maven, just grab the jar from the gwtwiki project page.

Usage examples of this lib are at http://code.google.com/p/gwtwiki/wiki/HTML2Mediawiki.

Basic example: getting the contents of an article

Note that, at the time of writing, the basic usage example in that documentation does not compile against the current version of the library.

Therefore, we will start with a basic example of our own, of which no variant is listed there, and extend it.

We are going to list the code to fetch the content of the “Web service” page and render it as HTML. Note that to get a specific page, you need to know its title.

If the page does not exist, a result with one empty page is returned.

For ambiguous titles, you may get back the disambiguation page rather than the article you meant, so even if you get a non-empty result, you still need to check it thoroughly.
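If you work with the raw `format=xml` response, both checks can be made directly on the XML. Below is a minimal sketch using only the JDK's DOM parser. It assumes the usual response shape, in which a non-existent title comes back as a `<page>` element with a `missing` attribute and the wikitext sits in a `<rev>` element. The class name is hypothetical, and the disambiguation test is a rough heuristic (looking for a `{{disambiguation}}`- or `{{dab}}`-style template) that will miss some cases.

```java
import java.io.StringReader;
import java.util.Locale;
import java.util.Optional;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;
import org.xml.sax.InputSource;

// Hypothetical helper, not part of bliki: inspects a raw format=xml API response.
class WikiPageCheck {

    // Returns the wikitext of the first page, or empty if the page does not exist.
    static Optional<String> extractContent(String apiXml) {
        Document doc;
        try {
            doc = DocumentBuilderFactory.newInstance()
                    .newDocumentBuilder()
                    .parse(new InputSource(new StringReader(apiXml)));
        } catch (Exception e) {
            throw new IllegalArgumentException("Not a parsable API response", e);
        }
        NodeList pages = doc.getElementsByTagName("page");
        if (pages.getLength() == 0) {
            return Optional.empty();
        }
        Element page = (Element) pages.item(0);
        // The API flags titles that do not exist with a "missing" attribute.
        if (page.hasAttribute("missing")) {
            return Optional.empty();
        }
        NodeList revisions = page.getElementsByTagName("rev");
        if (revisions.getLength() == 0) {
            return Optional.empty();
        }
        return Optional.of(revisions.item(0).getTextContent());
    }

    // Rough heuristic (an assumption): disambiguation pages usually
    // transclude a template such as {{disambiguation}} or {{dab}}.
    static boolean looksLikeDisambiguation(String wikitext) {
        String lower = wikitext.toLowerCase(Locale.ROOT);
        return lower.contains("{{disambig") || lower.contains("{{dab");
    }
}
```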

String[] listOfTitleStrings = { "Web service" };

User user = new User("", "", "http://en.wikipedia.org/w/api.php");

user.login();

List<Page> listOfPages = user.queryContent(listOfTitleStrings);

for (Page page : listOfPages) {

WikiModel wikiModel = new WikiModel("${image}", "${title}");

String html = wikiModel.render(page.getCurrentContent());

System.out.println(html);

}

We instantiate a User for the English Wikipedia endpoint. Since we are only going to read, we can log in anonymously, with an empty username and password.

We query the English Wikipedia for the specified titles and get one page back in the listOfPages variable.

We then instantiate a WikiModel. This class renders the HTML, and its constructor parameters, imageBaseUrl and linkBaseUrl, determine where the rendered images and links will point to. For example, if you want them to point to local files, you would supply a local path. In the example, I made them completely relative. In the official documentation, these are “http://www.mywiki.com/wiki/${image}” and “http://www.mywiki.com/wiki/${title}”, which you would use if you were hosting a Wikipedia copy at http://www.mywiki.com/wiki/.
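To make the difference between a relative and an absolute base URL concrete, here is a simplified, hypothetical illustration of how a `${title}` placeholder could be expanded into a link. It is not bliki's code: the assumption that spaces become underscores and the rest is URL-encoded is only an approximation of what the library does.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

// Hypothetical illustration of placeholder expansion; bliki's exact
// title encoding may differ from this approximation.
class LinkBase {

    static String expand(String linkBaseUrl, String title) {
        // Wiki-style titles use underscores instead of spaces.
        String encoded = URLEncoder.encode(title.trim().replace(' ', '_'),
                StandardCharsets.UTF_8);
        // Literal (non-regex) replacement of the placeholder.
        return linkBaseUrl.replace("${title}", encoded);
    }

    public static void main(String[] args) {
        System.out.println(expand("${title}", "Web service"));
        System.out.println(expand("http://www.mywiki.com/wiki/${title}", "Web service"));
    }
}
```

With the relative base `${title}`, a link to “Web service” resolves against whatever page the HTML is served from; with the absolute base, it always points to the mirror.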

We then render the page as html and print it out to the console.

The rendered output is very rudimentary and far from complete, though:

- Wikipedia templates and magic words, recognizable by their {{…}} markup, are not rendered. Instead, they are displayed literally.

- By default, all markup is rendered. However, you might need to leave certain parts out or modify the content a bit before it is displayed for your particular use case.
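Before deciding how to handle the {{…}} markup, it can help to see which templates a page actually uses. The following is a minimal, hypothetical sketch (not part of bliki) that collects template names with a regular expression. It assumes a name is everything between `{{` and the first `|` or `}}`, which is good enough for a survey but is not a full wikitext parser.

```java
import java.util.LinkedHashSet;
import java.util.Set;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper: lists the template names used in a piece of wikitext.
class TemplateScanner {

    // "{{", optional spaces, the name (no '|', '{' or '}'), then '|' or "}}".
    private static final Pattern TEMPLATE =
            Pattern.compile("\\{\\{\\s*([^|{}]+?)\\s*(?:\\||\\}\\})");

    static Set<String> templateNames(String wikitext) {
        Set<String> names = new LinkedHashSet<>(); // keeps first-seen order
        Matcher m = TEMPLATE.matcher(wikitext);
        while (m.find()) {
            names.add(m.group(1));
        }
        return names;
    }

    public static void main(String[] args) {
        System.out.println(templateNames(
                "{{Infobox software | name = X}} text {{citation needed|date=2011}} {{Reflist}}"));
    }
}
```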

Handling magic variables

Most magic words are not supported by the Java Wikipedia API.

We need to implement their rendering ourselves.

If you want to do more advanced conversion of the Wikipedia content, such as handling these magic words, you need to extend the WikiModel class. More info about this is at http://code.google.com/p/gwtwiki/wiki/Mediawiki2HTML.

This is what we are doing here:

package com.integratingstuff.wikimedia;

import java.util.Locale;

import java.util.Map;

import java.util.ResourceBundle;

import info.bliki.wiki.model.Configuration;

import info.bliki.wiki.model.WikiModel;

import info.bliki.wiki.namespaces.INamespace;

public class MyWikiModel extends WikiModel{

public MyWikiModel(Configuration configuration, Locale locale,

String imageBaseURL, String linkBaseURL) {

super(configuration, locale, imageBaseURL, linkBaseURL);

}

public MyWikiModel(Configuration configuration,

ResourceBundle resourceBundle, INamespace namespace,

String imageBaseURL, String linkBaseURL) {

super(configuration, resourceBundle, namespace, imageBaseURL, linkBaseURL);

}

public MyWikiModel(Configuration configuration, String imageBaseURL,

String linkBaseURL) {

super(configuration, imageBaseURL, linkBaseURL);

}

public MyWikiModel(String imageBaseURL, String linkBaseURL) {

super(imageBaseURL, linkBaseURL);

}

@Override

public String getRawWikiContent(String namespace, String articleName,

Map<String, String> templateParameters) {

String rawContent = super.getRawWikiContent(namespace, articleName, templateParameters);

if (rawContent == null){

return "";

}

else {

return rawContent;

}

}

}

In its default implementation, getRawWikiContent, which MyWikiModel overrides above, returns null for most magic words. A template such as {{InfoBox}} passes through this method with the default namespace=“Template” and articleName=“InfoBox”. If null is returned, the markup is output in the rendered HTML as is (so {{InfoBox}} shows up literally as {{InfoBox}}). As a result, the HTML is full of these unreadable tags, which does not make it look pretty.

To solve this, the code above returns “” instead of null, so the magic word is not rendered at all.

Nothing is stopping you from returning something else though.
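One option is a small lookup of your own replacements, with “” as the fallback. The sketch below isolates that decision in plain Java so it can be tested without bliki; the class name, the override map, and the idea of calling `resolve(namespace, articleName, super.getRawWikiContent(...))` from MyWikiModel are all assumptions for illustration.

```java
import java.util.Map;

// Hypothetical sketch of the decision made in getRawWikiContent, without bliki.
class TemplateFallback {

    // Custom replacement wikitext, keyed by template name.
    private final Map<String, String> overrides;

    TemplateFallback(Map<String, String> overrides) {
        this.overrides = overrides;
    }

    // "fetched" is whatever the default implementation returned (possibly null).
    String resolve(String namespace, String articleName, String fetched) {
        if (fetched != null) {
            return fetched; // content the model already knows how to provide
        }
        if ("Template".equals(namespace) && overrides.containsKey(articleName)) {
            return overrides.get(articleName); // our own replacement wikitext
        }
        return ""; // unknown template: drop it silently
    }

    public static void main(String[] args) {
        TemplateFallback fallback =
                new TemplateFallback(Map.of("Citation needed", "[citation needed]"));
        System.out.println(fallback.resolve("Template", "Citation needed", null));
    }
}
```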

Controlling the rendering of the html by implementing an ITextConverter

For my particular use case, I also did not want to render any HTML links, references, or images. The WikiModel class does not support leaving these out. However, the overloaded render method of WikiModel can take an ITextConverter as an argument: the object that is responsible for converting the parsed nodes to HTML (or another format, like pdf or plain text). The default ITextConverter, used when none is specified, is HTMLConverter, whose noLinks property is false by default.

However, there is an HTMLConverter constructor that sets the noLinks boolean. If you pass true to it, no links are rendered; their content is rendered as plain text instead.

Since I still had to leave out the reference and image elements, I still ended up subclassing the HTMLConverter.

First, I made a more extensible version of it:

package com.ceardannan.exams.wikimedia;

import info.bliki.htmlcleaner.ContentToken;

import info.bliki.htmlcleaner.EndTagToken;

import info.bliki.htmlcleaner.TagNode;

import info.bliki.htmlcleaner.Utils;

import info.bliki.wiki.filter.HTMLConverter;

import info.bliki.wiki.model.Configuration;

import info.bliki.wiki.model.IWikiModel;

import info.bliki.wiki.model.ImageFormat;

import info.bliki.wiki.tags.HTMLTag;

import java.io.IOException;

import java.util.Iterator;

import java.util.List;

import java.util.Map;

/**

* A converter which renders the internal tree node representation as specific

* HTML text, but which is easier to change in behaviour than its superclass and

* has a noImages property, which can be set to leave out all images

*

*/

public class ExtendedHtmlConverter extends HTMLConverter {

private boolean noImages;

public ExtendedHtmlConverter() {

super();

}

public ExtendedHtmlConverter(boolean noLinks) {

super(noLinks);

}

public ExtendedHtmlConverter(boolean noLinks, boolean noImages) {

this(noLinks);

this.noImages = noImages;

}

protected void renderContentToken(Appendable resultBuffer,

ContentToken contentToken, IWikiModel model) throws IOException {

String content = contentToken.getContent();

content = Utils.escapeXml(content, true, true, true);

resultBuffer.append(content);

}

protected void renderHtmlTag(Appendable resultBuffer, HTMLTag htmlTag,

IWikiModel model) throws IOException {

htmlTag.renderHTML(this, resultBuffer, model);

}

protected void renderTagNode(Appendable resultBuffer, TagNode tagNode,

IWikiModel model) throws IOException {

Map<String, Object> map = tagNode.getObjectAttributes();

if (map != null && map.size() > 0) {

Object attValue = map.get("wikiobject");

if (!noImages) {

if (attValue instanceof ImageFormat) {

imageNodeToText(tagNode, (ImageFormat) attValue, resultBuffer, model);

}

}

} else {

nodeToHTML(tagNode, resultBuffer, model);

}

}

public void nodesToText(List<? extends Object> nodes,

Appendable resultBuffer, IWikiModel model) throws IOException {

if (nodes != null && !nodes.isEmpty()) {

try {

int level = model.incrementRecursionLevel();

if (level > Configuration.RENDERER_RECURSION_LIMIT) {

resultBuffer

.append("<span class=\"error\">Error - recursion limit exceeded rendering tags in HTMLConverter#nodesToText().</span>");

return;

}

Iterator<? extends Object> childrenIt = nodes.iterator();

while (childrenIt.hasNext()) {

Object item = childrenIt.next();

if (item != null) {

if (item instanceof List) {

nodesToText((List<?>) item, resultBuffer, model);

} else if (item instanceof ContentToken) {

// render plain text content

ContentToken contentToken = (ContentToken) item;

renderContentToken(resultBuffer, contentToken, model);

} else if (item instanceof HTMLTag) {

HTMLTag htmlTag = (HTMLTag) item;

renderHtmlTag(resultBuffer, htmlTag, model);

} else if (item instanceof TagNode) {

TagNode tagNode = (TagNode) item;

renderTagNode(resultBuffer, tagNode, model);

} else if (item instanceof EndTagToken) {

EndTagToken node = (EndTagToken) item;

resultBuffer.append('<');

resultBuffer.append(node.getName());

resultBuffer.append("/>");

}

}

}

} finally {

model.decrementRecursionLevel();

}

}

}

protected void nodeToHTML(TagNode node, Appendable resultBuffer,

IWikiModel model) throws IOException {

super.nodeToHTML(node, resultBuffer, model);

}

}

ExtendedHtmlConverter behaves almost the same as the original HTMLConverter, but its code is split into more methods for easier overriding, and it adds a noImages boolean which, when set to true, leaves out all images at render time.
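The benefit of one protected method per node type can be shown with a toy model that does not depend on bliki. Everything below is hypothetical: the node classes are simplified stand-ins for bliki's ContentToken, TagNode, and image nodes, and the recursion limit value is arbitrary. It only illustrates the structure: a recursive walk with a depth guard, a noImages switch, and a subclass that changes behaviour by overriding a single hook.

```java
import java.util.Arrays;
import java.util.List;

// Toy stand-ins for bliki's parsed nodes (hypothetical, for illustration only).
class ToyText {
    final String content;
    ToyText(String content) { this.content = content; }
}

class ToyTag {
    final String name;
    final List<Object> children;
    ToyTag(String name, Object... children) {
        this.name = name;
        this.children = Arrays.asList(children);
    }
}

class ToyImage {
    final String src;
    ToyImage(String src) { this.src = src; }
}

// One protected hook per node type, so a subclass overrides only what it needs.
class ToyConverter {
    static final int RECURSION_LIMIT = 100; // arbitrary limit for the sketch
    private final boolean noImages;

    ToyConverter(boolean noImages) { this.noImages = noImages; }

    String convert(List<Object> nodes) {
        StringBuilder out = new StringBuilder();
        nodesToText(nodes, out, 0);
        return out.toString();
    }

    protected void nodesToText(List<Object> nodes, StringBuilder out, int level) {
        if (level > RECURSION_LIMIT) {
            out.append("<span class=\"error\">recursion limit exceeded</span>");
            return;
        }
        for (Object item : nodes) {
            if (item instanceof ToyText) {
                renderText(out, (ToyText) item);
            } else if (item instanceof ToyTag) {
                renderTag(out, (ToyTag) item, level);
            } else if (item instanceof ToyImage && !noImages) {
                renderImage(out, (ToyImage) item);
            }
        }
    }

    protected void renderText(StringBuilder out, ToyText text) {
        // Minimal escaping so plain text cannot inject markup.
        out.append(text.content.replace("&", "&amp;")
                .replace("<", "&lt;").replace(">", "&gt;"));
    }

    protected void renderTag(StringBuilder out, ToyTag tag, int level) {
        out.append('<').append(tag.name).append('>');
        nodesToText(tag.children, out, level + 1);
        out.append("</").append(tag.name).append('>');
    }

    protected void renderImage(StringBuilder out, ToyImage image) {
        out.append("<img src=\"").append(image.src).append("\"/>");
    }
}

// Overriding a single hook is enough to drop <ref> elements entirely.
class NoRefConverter extends ToyConverter {
    NoRefConverter(boolean noImages) { super(noImages); }

    @Override
    protected void renderTag(StringBuilder out, ToyTag tag, int level) {
        if (!"ref".equals(tag.name)) {
            super.renderTag(out, tag, level);
        }
    }
}
```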

And then I subclassed this class like this:

package com.ceardannan.exams.wikimedia;

import info.bliki.htmlcleaner.ContentToken;

import info.bliki.htmlcleaner.Utils;

import info.bliki.wiki.model.IWikiModel;

import info.bliki.wiki.tags.HTMLTag;

import java.io.IOException;

public class MyHtmlConverter extends ExtendedHtmlConverter {

public MyHtmlConverter() {

super();

}

public MyHtmlConverter(boolean noLinks) {

super(noLinks);

}

public MyHtmlConverter(boolean noLinks, boolean noImages) {

super(noLinks, noImages);

}

@Override

protected void renderContentToken(Appendable resultBuffer,

ContentToken contentToken, IWikiModel model) throws IOException {

String content = contentToken.getContent();

// strip leftovers of dropped templates: "(," becomes "(" and "()" is removed

content = content.replace("(,", "(").replace("()", "");

content = Utils.escapeXml(content, true, true, true);

resultBuffer.append(content);

}

@Override

protected void renderHtmlTag(Appendable resultBuffer, HTMLTag htmlTag,

IWikiModel model) throws IOException {

String tagName = htmlTag.getName();

// skip <ref> tags, so no references are rendered

if (!"ref".equals(tagName)) {

super.renderHtmlTag(resultBuffer, htmlTag, model);

}

}

}

If the converter encounters a “ref” HTML tag, it does not render it. As a result, no references are rendered at all.

I also changed the rendering of the content a bit. Because we return “” for the magic words (see above), fragments such as “(,” or “()” can be left in the text, and the line that replaces them cleans up the rendered HTML.
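Plain string replacements only catch the exact fragments “(,” and “()”. If the leftovers also contain whitespace, a regex-based variant along these lines might be more robust. This is an assumption about what the leftovers look like in practice, and the class name is hypothetical.

```java
// Hypothetical regex-based variant of the cleanup in renderContentToken.
class LeftoverCleanup {

    static String clean(String content) {
        return content
                // "(, WS)" -> "(WS)": drop a separator left right after "("
                .replaceAll("\\(\\s*[,;]\\s*", "(")
                // "HTTP () is" -> "HTTP is": drop empty parentheses and the space before them
                .replaceAll("\\s*\\(\\s*\\)", "");
    }

    public static void main(String[] args) {
        System.out.println(clean("A web service (, WS) is a method"));
    }
}
```

Applying the separator rule first means “(,)” is reduced to “()” and then removed by the second rule.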

The code that we call to get the html is now:

MyWikiModel wikiModel = new MyWikiModel("${image}", "${title}");

String currentContent = page.getCurrentContent();

String html = wikiModel.render(

new MyHtmlConverter(true, true), currentContent);

The end result

We now have a properly formatted, completely offline article, with all its links, images, and references stripped.

In this blog, Steffen Luypaert gives tutorials on how to integrate Java frameworks and third-party APIs with each other.

I have some difficulty importing the info.bliki.wiki library into Eclipse. I tried to import the Zip Archive into a new empty project, but when I create a new class I get an “import” error. I also tried adding the same Zip Archive to the Build Path, but ran into the same error.

Can you give me a simple guide on how to use (import) the Bliki Engine in Eclipse?

I’m a beginner!

Thank you sooo much.

Hello, you need to import the jar in the zip into the project, not the zip itself. After downloading the zip, you need to extract it and put the bliki jar on your classpath.

Hello,

Everything worked pretty well for me, but I’m having trouble accessing some Wikipedia articles. It works fine with Rome, for example, but I can’t get it to access St. Mary’s College of Maryland. Is there any way to get the correct title, or to pass the link directly to the API?

Thanks!

Hey Steffen,

I must say your explanation is very clear, and I always enjoy such concrete examples because they’re easy to implement, but for some reason this one won’t work for me.

There are basically two scenarios:

- The first is where I try this piece of code with the links you provided (so the MediaWiki API page). I then get a connection timeout. Most likely this has something to do with the proxy settings; I’ll have to ask IT about that.

- In the second scenario I try to access a local Wiki and get information from the Main Page. The problem is that no matter how many input Title Strings I give, I always get back 2 results and both have all “null” values.

Do these problems sound familiar to you? Is there any way around them that you know of?

Thanks in advance!

Hi Steffen,

Thanks for your code, it is really very helpful.

I would like to ask whether I can use your code to extract the categories of any wiki page, and, if there are ambiguous results, how I can resolve them to get an accurate result.

Thanks in advance

Arwa

Hello,

Thank you for your code, it’s very helpful.

I need to get the list of pages that contain the words of a query, not just the page whose title is the same as the query (I mean, I need to do a full-text search in Wikipedia). Do you have any idea how to do this?

Thank you

Thanks dude!

Hi Steffen,

Thanks for writing such a great article.

Lukas

The examples are great. I’m trying to figure out how I can extract the XML of an article (instead of the HTML in your example). I want to do this without using an XML dump. I want to obtain the XML for each article query. Can bliki do this?

Hello sir,

I want to add some new pages to my MediaWiki programmatically, as you have shown above. Please help.

Thanks.

Hello,

Please, I’m searching for a Java API for Wikipedia to extract the number of search hits for a term.

Can this API help me?

I do not want to use Maven; my project becomes very, very slow when using Maven.

I went to the website, and on the download page all projects are source.

Can you provide the exact link to get the jar files to run this?

Hello,

I’m trying to use this library, but I want to get Wikipedia pages by keywords, not by article title. Is this possible with this library?
